Follow these steps to install Python on Windows and run `pip` commands directly from your command prompt, just as you would on macOS or Ubuntu.

STEP 1

- Check your system settings to find out whether your system is 32-bit or 64-bit (on Windows 10, Settings > System > About, under "System type").

- Go to the Anaconda website and download the matching Individual Edition installer for Python 3 (32-bit or 64-bit), which is open source and completely free.

- Install Anaconda like any other software.

- During installation, under “Advanced Options”, make sure the option “Add Anaconda to my PATH variable” is checked.
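Once Anaconda is installed, you can double-check that you picked the right installer by asking Python itself. The sketch below uses only the standard library (`struct` and `platform`); the function names are illustrative and not part of Anaconda. Save it to a file (for example a hypothetical `check_bits.py`) and run it with `python check_bits.py` from Anaconda Prompt.

```python
import platform
import struct


def interpreter_bits() -> int:
    """Return 32 or 64: the pointer size of the running Python interpreter, in bits."""
    # "P" is the struct format code for a C void pointer; its size is 4 or 8 bytes.
    return struct.calcsize("P") * 8


def machine_name() -> str:
    """Return the machine type reported by the OS, e.g. 'AMD64' on a typical 64-bit PC."""
    return platform.machine()


if __name__ == "__main__":
    print(f"Python interpreter: {interpreter_bits()}-bit")
    print(f"Machine type: {machine_name()}")
```

If the interpreter reports 32-bit on a machine you know is 64-bit, you most likely downloaded the 32-bit installer.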

STEP 2

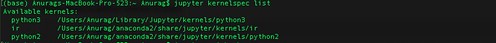

- After installation, you’ll find Anaconda Prompt in your All Programs list.

- Open it and use the `cd` command to go to the directory where you will save your project.

- If you need a new folder for your project, create it with `mkdir foldername` and then `cd` into it.

- Once inside the directory, run `conda create --name mydevelopment python==3.7.6`.

- Press Enter, and answer `y` when it asks for permission to proceed.

- When it finishes, conda prints the commands to activate and deactivate the environment (copy them into Notepad for reference).

- Now type `conda activate mydevelopment`.

- You’ll see `(mydevelopment)` at the start of your prompt.

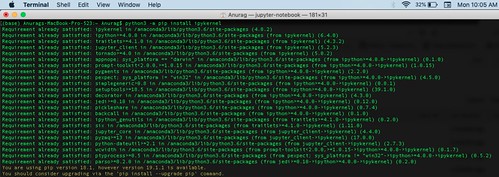

- Now you can use `pip install` and commands such as `ls` directly in this prompt (`dir` is the native Windows equivalent of `ls`).
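To confirm that the activation worked and that the environment really runs Python 3.7.6, you can check from inside Python. A minimal sketch, assuming `conda activate` has set the `CONDA_DEFAULT_ENV` variable to the environment's name (which it does for named environments); the function names are hypothetical, and the optional arguments exist only so the checks can be tried with made-up values.

```python
import os
import sys


def active_env(environ=os.environ):
    """Return the name of the active conda environment, or None if none is active."""
    # `conda activate` sets CONDA_DEFAULT_ENV to the environment's name.
    return environ.get("CONDA_DEFAULT_ENV")


def matches_version(expected="3.7.6", version_info=sys.version_info):
    """Return True if the interpreter's major.minor.micro version equals `expected`."""
    running = f"{version_info[0]}.{version_info[1]}.{version_info[2]}"
    return running == expected


if __name__ == "__main__":
    print("Active environment:", active_env())
    print("Python", sys.version.split()[0], "- matches 3.7.6:", matches_version())
```

Run inside the activated prompt, the first line should print `mydevelopment`.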

STEP 3

- You are done.

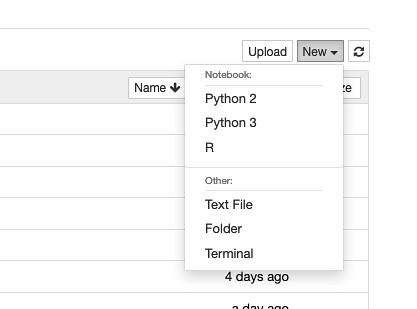

- You can open Jupyter Notebook and Spyder from Anaconda Navigator in the All Programs list.

- Additional info: whenever you need to install packages the way you would on Mac or Linux (Ubuntu), activate the environment first.

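- Open Anaconda Prompt, go to your project directory, and type `conda activate mydevelopment` (conda stores the environment in Anaconda’s `envs` folder, so activation also works from any other directory).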

- To deactivate it, type `conda deactivate`.
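Before running `pip install`, you can verify that the `python` you are using belongs to the activated environment, so packages land in `mydevelopment` rather than in the base installation. A minimal sketch, assuming conda has set `CONDA_PREFIX` (which `conda activate` does) and comparing it with `sys.prefix`; the function name is hypothetical. Running `python -m pip install <package>` instead of bare `pip` is a common way to tie pip to that same interpreter.

```python
import os
import sys


def pip_targets_active_env(environ=os.environ, prefix=sys.prefix):
    """Return True if this interpreter lives inside the active conda environment."""
    conda_prefix = environ.get("CONDA_PREFIX")
    if conda_prefix is None:
        return False  # no conda environment is active
    # normpath drops trailing separators; normcase ignores case on Windows paths.
    def canon(path):
        return os.path.normcase(os.path.normpath(path))
    return canon(conda_prefix) == canon(prefix)


if __name__ == "__main__":
    print("pip installs go to the active environment:", pip_targets_active_env())
```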