11 Data Mining Algorithms

1. Regression & Classification

- Linear

- Multivariate Linear

- Logistic

- Softmax

- Vectorization

- Gradient Calculation

- Stochastic Gradient Descent (SGD)

- Optimizers and Objectives

2. Regularization

- Ridge regression

3. Clustering

- k-Means

- EM Algorithms

4. Unsupervised Learning

- Autoencoders

- PCA Whitening

- Sparse Coding

5. Neural Network

- Perceptrons

- Backpropagation

- Restricted Boltzmann Machines

- Learning Vector Quantization

6. Deep Learning

- Stacked Autoencoders

- Convolutional Neural Networks (Feature Extraction, Pooling)

- Deep Boltzmann Machines

- Deep Belief Networks

7. Decision Trees

- ID3

- C4.5

- CART (Classification and regression tree)

- Random Forests

8. Bayesian

- Naïve Bayes

- Gaussian Naïve Bayes

- Bayesian Networks

- Conditional Random Fields

- Hidden Markov Models

9. Others

- Support Vector Machines

- Evolutionary Methods

- Reinforcement Learning


10. Dimensionality Reduction

- PCA

11. Ensemble Methods

- Boosting

- Bagging

- AdaBoost

Best Data Visualization Resources

Data Visualization Websites Online

- Juice Analytics

- Silk

- Datawrapper

- Polychart

- Plotly

- DataHero

- Number Picture

- Weave

- Datavisual

- Zoomdata (via the cloud platforms)

- RAW

Software

- Tableau

- SAP Lumira (including a free Personal Edition version)

- Microsoft Excel (or any other spreadsheet that includes charts)

- ClearStory

- BeyondCore

- Mathematica

- MATLAB

- Matplotlib (if you are comfortable programming in Python)

- R Programming Language

- ggplot2

Top Ten Places where AI and Machine Learning make our Life Easier

Using our creativity to automate our surroundings seems to be the one aim we have left. Day by day, AI and machine learning are automating more and more parts of our lives.

We have all heard about AI, thanks to the movies that introduced it, but what about machine learning (ML)? For most of us ML is just a buzzword. Basically, ML makes a computer learn.

Credits: http://www.parlezwireless.com/

In a nutshell, ML resembles the very first learning of our childhood. A child has a book containing lots of pictures of fruits, animals, vegetables, and trees. These pictures are the child’s training data set, and that data is used to answer questions. For example, the child is shown a picture and has to identify it based on the pictures stored in his or her mind. That is what ML does. ML keeps updating its training data set based on what it identified correctly or incorrectly, and so gets smarter at completing its tasks over time. If you have used Google, Netflix, Amazon, or Gmail, then you have interacted with machine learning (ML).
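The picture-book analogy can be sketched as a nearest-neighbour classifier: a new sample gets the label of the stored example it most resembles, and confirmed examples are added to the book. This is only an illustration of the idea, not how any particular product works; the features (weight in grams, a redness score) and all numbers are invented.

```python
import math

# Hypothetical "picture book": (weight in grams, redness from 0 to 1) -> label.
# All values are invented for illustration.
TRAINING_SET = [
    ((150.0, 0.9), "apple"),
    ((170.0, 0.8), "apple"),
    ((120.0, 0.1), "banana"),
    ((130.0, 0.2), "banana"),
]


def classify(sample, training_set):
    """Return the label of the stored example closest to the sample."""
    nearest = min(training_set, key=lambda item: math.dist(item[0], sample))
    return nearest[1]


def learn(sample, label, training_set):
    """Add a newly confirmed example so that later answers can improve."""
    training_set.append((sample, label))
```

Calling `classify((125.0, 0.15), TRAINING_SET)` returns `"banana"`, because both stored bananas are closer to the sample than either apple.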

- Recommendations

You have surely seen recommendations if you use services like YouTube, Amazon, or Netflix. Every click is monitored and recorded. Driven by machine learning, these sites analyze our activity and compare it with that of millions of other users to “recommend” or “suggest” other similar videos, products, or films that we might like.
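The idea of comparing one user’s activity with that of other users can be sketched as a tiny user-based collaborative filter. This is a minimal illustration under invented data, not the method any of the sites above actually uses; the user names, titles, and the choice of Jaccard similarity are all assumptions.

```python
# Hypothetical viewing history: user -> set of titles they watched.
HISTORY = {
    "you": {"A", "B"},
    "user1": {"A", "B", "C"},
    "user2": {"A", "D"},
    "user3": {"E"},
}


def jaccard(a, b):
    """Overlap between two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user, history, top_n=3):
    """Rank unseen items by the similarity of the users who chose them."""
    seen = history[user]
    scores = {}
    for other, items in history.items():
        if other == user:
            continue
        weight = jaccard(seen, items)
        for item in items - seen:
            scores[item] = scores.get(item, 0.0) + weight
    # Highest score first; ties are broken alphabetically.
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    return [item for item in ranked if scores[item] > 0][:top_n]
```

Here `recommend("you", HISTORY)` returns `["C", "D"]`: title C comes from the most similar user, D from a less similar one, and E is dropped because its only viewer shares nothing with "you".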

- Online Search

AI is transforming the results of Google and other search engines by watching how we respond to the results displayed. If we click a result on the very first page and stop there, we have found what we were looking for. If we instead go to the second page or refine our query, the search engine assumes that it did not understand what we wanted, so it learns from its mistake and shows better results in the future.

- In Hospitals

credits: http://assets.fastcompany.com


Because it can analyze vast amounts of data, ML is well placed to process information and spot more patterns, such as those of cancer or eye diseases, than a human can, by several orders of magnitude. Computer-aided diagnosis (CAD) can help radiologists find early-stage breast cancers that might otherwise be missed, and it can identify 52% of these missed cancers roughly a year before they were actually detected. Zebra Medical Vision is an Israeli company that applies advanced machine learning techniques to the field of radiology. It has amassed a huge training set of medical images, along with categorization technology that will allow computers to predict multiple diseases with better-than-human accuracy. In 2016, the company unveiled two new software algorithms to help predict, and even prevent, cardiovascular events such as heart attacks.

- Data Security

According to Kaspersky, between January and September 2016 ransomware attacks on businesses increased from one every 2 minutes to one every 40 seconds. Symantec also reported high levels of ransomware attacks, with over 50,000 in March 2016 alone. A report by Osterman Research indicates that 47% of organizations in the US had been targeted at least once in 2016, and a survey in the UK suggested that 54% of businesses had been attacked at least once. Friday, May 12, 2017, saw one of the largest and most widespread attacks to date: the WannaCry ransomware. According to Deep Instinct, new malware tends to share almost all of its code with earlier malware, with only 2 to 10% of it changed. Because the changes are so slight, ML can predict with great accuracy which files are malware.

- Email spam filtering

Credits: http://www.asistiletisim.com

According to Computerworld magazine, the average employee gets 13 spam messages a day, and over 80 percent of all the email messages zipping around the Internet are spam. Microsoft founder Bill Gates is the most spammed man in the world, with 4 million emails arriving in his inbox each day. The credit for usable inboxes goes to ML, which filters all emails and classifies them as spam or not spam.
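Classifying mail as spam or not spam can be sketched with Naïve Bayes, one of the algorithms listed at the top of this post. The sketch below is a minimal illustration with add-one (Laplace) smoothing; the four training messages are invented, and real filters learn from far more data and features.

```python
import math
from collections import Counter

# Tiny made-up training set; "ham" means a legitimate (non-spam) message.
TRAINING = [
    ("win money now", "spam"),
    ("cheap money offer", "spam"),
    ("meeting at noon", "ham"),
    ("project status meeting", "ham"),
]


def train(examples):
    """Count words per class and messages per class."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, class_counts


def classify(text, word_counts, class_counts):
    """Pick the class with the higher log-probability for the text."""
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    total_messages = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in ("spam", "ham"):
        # Start from the prior: how common this class was in training.
        score = math.log(class_counts[label] / total_messages)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            count = word_counts[label][word] + 1  # add-one smoothing
            score += math.log(count / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

After `word_counts, class_counts = train(TRAINING)`, the call `classify("cheap money", word_counts, class_counts)` returns `"spam"`, while `"status meeting"` is classified as `"ham"`.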

- Marketing Personalization

Personalized marketing is the ultimate form of targeted marketing. To sell more we have to serve better, and to serve better we have to understand our customers. This is the basic idea behind marketing personalization. Companies can personalize the emails customers receive, the products shown as recommended, the offers and coupons they see, and so on; these are just the tip of the iceberg. All of this is achieved with advanced ML algorithms.

- Fraud Detection

ML and AI are getting better day by day at spotting potential cases of fraud and other anomalies across many different fields. The Royal Bank of Scotland (RBS), for example, is using machine learning to fight money laundering. Companies hold a lot of data, and they use ML to compare millions of transactions and to distinguish precisely between legitimate and fraudulent transactions between buyers and sellers.

- Natural Language Processing (NLP)

Virtual personal assistants, the likes of Siri, Alexa, Cortana, and Google Assistant, are able to follow instructions because of voice recognition, which is a form of NLP. NLP processes human speech, matches it to the best-fitting command, and responds in a natural way.

- Financial Trading

At its heart, financial trading is no different from any other form of trading: it is about buying and selling in the hope of making a profit. Here lies its appeal: “Predict what the stock market will do on any given day”. Again, ML wins the game of prediction, if only by a narrow margin. ML helps many prestigious trading firms execute trades at very high speed and in high volume. ML leaves humans far behind at consuming vast amounts of data at a very fast pace.

- Smart Cars

Credits: http://ichef.bbci.co.uk

Of all the uses for machine learning, one of the most exciting is smart cars. In a recent IBM survey of top auto executives, some 74% said they expected there would be smart cars on the roads by 2025. Smart cars integrate IoT, ML, and AI, which help a car do many remarkable things on its own: learn about its owner and environment, adjust internal settings, report and even fix problems, offer real-time advice about traffic and road conditions, and, in extreme cases, even take evasive action to avoid a potential collision.

The biggest big data challenges

Credits: https://blogs-images.forbes.com

We all know that necessity is the mother of invention, and we never want to stop inventing because it is in our genes.

The complexity of today’s business environment gave rise to the concept of big data. Nowadays data, and how it is used, sets companies apart from each other and, most importantly, keeps them in business. To that end, companies turn as much data as possible into meaningful products with data-driven discoveries for their users. The right analytics on data maximise revenue, improve operations, and mitigate risks. According to Demirkan and Dal, big data has six “V” characteristics: Volume, Velocity, Variety, Veracity, Variability, and Value. The biggest big data challenges, however, are somewhat harder to see.

IDC predicts that big data revenue will increase by more than 50%, from nearly $122 billion in 2015 to more than $187 billion in 2019. Nearly 73% of companies are increasing their investment in analytics to turn data into gold, but 60 percent of them feel that they don’t have the proper tools to get insight from their data. Research predicts that half of all big data projects will fail to deliver the desired output.

When Gartner asked what the biggest big data challenges were, the responses suggested that while companies plan to move ahead with big data projects, they still don’t have a good idea about what they’re doing and why. The second major concern is the failure to establish data governance and management. Thomas Schutz, SVP and General Manager of Experian Data Quality, says that “The biggest problem organisations face around data management today actually comes from within,” and “Businesses get in their own way by refusing to create a culture around data and not prioritising the proper funding and staffing for data management.”

There are many challenges, but data-related issues are the biggest challenges in big data.

$19 Trillion Tech Industry Heading Our Way (IoT)

The “Internet of Things” is an emerging technology, and a phrase that 87% of people haven’t heard of. The Internet of Things (IoT) is the inter-networking of physical devices, vehicles (also referred to as “connected devices” and “smart devices”), buildings, and other items embedded with electronics, software, sensors, actuators (MEMS), and network connectivity that enable these objects to collect and exchange data via the Internet.

Surprisingly, ATMs were among the first IoT objects, dating back to 1974.

The IoT market has been growing at a parabolic rate that the world has never witnessed. IoT could DWARF every technology before it, and it has only just begun. Experts estimate that the IoT will consist of almost 50 billion objects by 2020, in the span of only 4 years. By comparison, it took 40 years to sell 1 billion personal computers, 20 years to reach nearly 7 billion cellphone users, and 5 years to reach 1 billion tablets. So we can say that the IoT is the future of ALL technology. It is on the pulse of everything shaping the new Internet.

In order to work, every IoT device has a piece of software and sensors, also known as MEMS (Micro-Electro-Mechanical Systems). Software and MEMS let IoT sense, think, and act. We can say that MEMS are the eyes and ears of IoT, and Big Data is the “fuel” that powers it.

Why has IoT come out of nowhere?

To answer this question we have to look at the different views of tech luminaries. Bob Metcalfe, inventor of Ethernet, thinks “It’s a media phenomenon. Technologies and standards and products and markets emerge slowly, but then suddenly, chaotically, the media latches on and BOOM! It’s the year of IoT.” Hal Varian, Chief Economist at Google, believes that it has something to do with Moore’s Law: “The price of processors, sensors, and networking has come way down. Since WiFi is now widely deployed, it is relatively easy to add new networked devices to the home and office”. Janusz Bryzek, the father of sensors, thinks there are multiple factors. First, there is the new version of the Internet Protocol, IPv6, “enabling the almost unlimited number of devices connected to networks.” Another factor is that four major network providers, Cisco, IBM, GE, and Amazon, have decided “to support IoT with network modification, adding Fog layer and planning to add Swarm layer, facilitating dramatic simplification and cost reduction for network connectivity.” Last but not least, new forecasts present IoT as the future king of all technologies. IoT will add trillions to global GDP. “This is the largest growth in the history of humans,” says Bryzek.

Who is feeding the sleeping giant?


A recent GE survey reveals that 90% of companies rank implementing IoT among their top 3 priorities. In an active move to accommodate new and emerging technological innovation, the UK Government allocated £40,000,000 in its 2015 budget towards research into the Internet of Things. Warren Buffett alone has invested $13 billion. Barcelona, Spain, invested in it, and its water system alone saves $58 million annually. Glasgow, Scotland, is investing $37 million in this innovation. The “DIGIT” Act (Developing Innovation and Growing the Internet of Things Act) is the bill that represents the American government’s investment in IoT.

IoT in our daily lives, and a future of limitless opportunities

The London School of Economics said that “The future is now and [this revolution] is going to disrupt most of the traditional industries”.

It will boost productivity and save companies millions, likely billions, each and every year.

1. Facebook said that this breakthrough innovation has already delivered savings of 38% in its data centres.

2. UPS uses IoT to reduce its fuel consumption by 9 million gallons, a $31 million saving.

3. Driverless cars will generate $1.3 trillion in annual savings in the United States, with over $5.6 trillion in savings worldwide. The number of cars connected to the Internet worldwide will grow more than sixfold, from 23 million in 2013 to 152 million in 2020.

4. The global wearable device market grew 223% in 2015. It exceeded $1.5 billion in 2014, double its value in 2013.

5. The connected kitchen saves the food and beverage industry as much as 15% annually.

6. Consumer electronics M2M connections will top 7 billion in 2023, generating $700 billion in annual revenue. By 2020, there will be over 100 million Internet-connected wireless light bulbs and lamps worldwide, up from 2.4 million in 2013.

7. Health care: the University of California medical centre has a robot-controlled pharmacy that has dispensed 350,000 prescriptions without making a single error.

8. The legal system is also using robots. The New York Times reported on Blackstone Discovery, an e-discovery company that uses electronic data discovery software. It can analyse 1.5 million documents for less than 100,000 dollars. A team of paralegals would have charged 2.1 million dollars for the review.

9. Fast food: Eatsa is a futuristic San Francisco-based vegetarian fast food restaurant with no employees. Customers use a touch screen to order their food, and the meal is ready in a matter of minutes.

10. Retail: RFID technology helps tag products so that they can be tracked. With IoT-enabled RFID tags, retailers can expect 99% accuracy in inventory and a 2 to 7% increase in sales.

The examples above are just the tip of the iceberg. These machines are getting smarter every day and are starting, essentially, to think on their own.

This technology is not just a game changer. It is THE game changer. So get ready: the Internet of Things is here to stay.

5 Pillars of a Data Scientist Career

- Genetically Modified Leadership

- Great Power Great Responsibility

- Big Picture in Big Data

- KISS

- Feedback

1. Genetically Modified Leadership:

You, and you alone, are there to guide and lead yourself. To get noticed by the world you have to modify yourself well, just like GMO food. Tackling your weaknesses and polishing your strengths will lead you to greatness. By being at once the best mentor and the best student you can possibly be, you will bring forth your GM leadership skills. Leadership will remove self-doubt and motivate you with a heightened sense of self-worth. Control and shape your own views to be more positive and productive. The daily intensity of the data scientist role can be increasingly stressful, because data scientists hold data, and data is power.

2. Great Power Great Responsibility:

Power and responsibility always exist side by side. Nowadays data is power, and with it comes responsibility. So make staying calm your highest priority; then, and only then, will everything else go well.

3. Big Picture in Big Data:

In the age of big data, it is essential to condense your environment into manageable points so that you can keep a big-picture perspective.

4. KISS (keep it short and simple):

The KISS principle states that most systems work best if they are kept simple rather than made complicated. So keep the rules and procedures of any model short and simple, so that they do not come back to burn you in the future. And always keep in mind the quotes attributed to Albert Einstein: “If you can’t explain it simply, you don’t understand it well” and “Make things as simple as possible, but not simpler.”

5. Feedback:

Feedback is the lifeblood of a data scientist. After countless efforts, a data scientist waits for feedback to come. Feedback acts like oxygen and blood, everything that keeps you alive every moment. The more feedback you get, the more powerful you and your work will be.

Python Libraries for Data Science

Data Analysis – Machine Learning

Pandas (data preparation)

Pandas helps you carry out your entire data analysis workflow in Python without having to switch to a more domain-specific language like R. It supports practical, real-world data analysis: reading and writing data, data alignment, reshaping, slicing, fancy indexing and subsetting, size mutability, merging and joining, hierarchical axis indexing, and time-series functionality.

See More: Pandas Documentation

Scikit-learn (Machine Learning)

- Simple and efficient tools for implementing Classification, Regression, Clustering, Dimensionality Reduction, Model Selection, Preprocessing.

- Built on NumPy, SciPy, and Matplotlib.

See More: Scikit-learn Documentation

Gensim (Topic Modelling)

Gensim offers scalable statistical semantics: it analyses plain-text documents for semantic structure and retrieves semantically similar documents.

See More: Gensim Documentation

NLTK (Natural Language Processing)

Text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, plus wrappers for industrial-strength NLP libraries. NLTK covers working with corpora, categorising text, and analysing linguistic structure.

See More: NLTK Documentation

PyTables

PyTables is a package for managing hierarchical datasets, designed to cope efficiently with large amounts of data. It is built on top of the HDF5 library and the NumPy package, and it features an object-oriented interface that makes it a fast, easy-to-use tool for interactively saving and retrieving large amounts of data.

See More: PyTables Documentation

Deep Learning

Deep Learning is a new area of Machine Learning research, which has been introduced with the objective of moving Machine Learning closer to one of its original goals: Artificial Intelligence.

See More: Deep Learning Documentation

Data Visualization

Seaborn

Seaborn is a Python visualisation library based on Matplotlib. It provides a high-level interface for drawing attractive statistical graphics.

See More: Seaborn Documentation

Matplotlib

Matplotlib is a Python 2D plotting library that produces publication-quality figures in a variety of hardcopy formats and interactive environments across platforms. Matplotlib can be used in Python scripts, the Python and IPython shells, the Jupyter notebook, web application servers, and four graphical user interface toolkits.

See More: Matplotlib Documentation

Bokeh

Bokeh is a Python interactive visualisation library that targets modern web browsers for presentation. Its goal is to provide elegant, concise construction of novel graphics in the style of D3.js, and to extend this capability with high-performance interactivity over very large or streaming datasets. Bokeh can help anyone who would like to quickly and easily create interactive plots, dashboards, and data applications.

See More: Bokeh Documentation

SciPy (data quality)

SciPy is a Python library used for scientific and technical computing.

SciPy contains modules for optimisation, linear algebra, integration, interpolation, special functions, FFT, signal and image processing, ODE solvers, and other tasks common in science and engineering.

SciPy builds on the NumPy array object and is part of the NumPy stack, which includes tools like Matplotlib, pandas, and SymPy.

See More: SciPy Documentation

Big Data/Distributed Computing

Hdfs3

hdfs3 is a lightweight Python wrapper for libhdfs3, to interact with the Hadoop File System HDFS.

See More: Hdfs3 Documentation

Luigi

Luigi is a Python package that helps you build complex pipelines of batch jobs. It handles dependency resolution, workflow management, visualisation, handling failures, command line integration, and much more.
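Dependency resolution, the core idea behind such pipelines, can be sketched with the standard library alone. The example below is not Luigi’s API (Luigi expresses tasks as classes); it only illustrates running tasks in dependency order and skipping those already done. The task names are hypothetical, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "download": set(),
    "clean": {"download"},
    "aggregate": {"clean"},
    "report": {"aggregate", "clean"},
}


def run_pipeline(pipeline, done=()):
    """Visit tasks in dependency order, skipping those already completed."""
    executed = []
    for task in TopologicalSorter(pipeline).static_order():
        if task in done:
            continue  # treated as already complete, so it is not run again
        executed.append(task)  # a real task would do its work here
    return executed
```

`run_pipeline(PIPELINE)` returns `["download", "clean", "aggregate", "report"]`, and `run_pipeline(PIPELINE, done={"download", "clean"})` returns only `["aggregate", "report"]`.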

See More: Luigi Documentation

H5py

It lets you store huge amounts of numerical data, and easily manipulate that data from NumPy. For example, you can slice into multi-terabyte datasets stored on disk, as if they were real NumPy arrays. Thousands of datasets can be stored in a single file, categorised and tagged however you want. H5py uses straightforward NumPy and Python metaphors, like dictionary and NumPy array syntax. For example, you can iterate over data sets in a file, or check out the .shape or .dtype attributes of datasets.

See More: H5py Documentation

PyMongo

PyMongo is a Python distribution containing tools for working with MongoDB, and is the recommended way to work with MongoDB from Python.

See More: PyMongo Documentation

Dask

Dask is a flexible parallel computing library for analytic computing. Dask has two main components:

- Dynamic task scheduling optimised for computation. This is similar to Airflow, Luigi, Celery, or Make, but optimised for interactive computational workloads.

- “Big Data” collections like parallel arrays, data frames, and lists that extend common interfaces like NumPy, Pandas, or Python iterators to larger-than-memory or distributed environments. These parallel collections run on top of the dynamic task schedulers.

See More: Dask Documentation

Dask.distributed

Dask.distributed is a lightweight library for distributed computing in Python. It extends both the concurrent.futures and dask APIs to moderate-sized clusters. Distributed serves to complement the existing PyData analysis stack and meets the following needs: low latency, peer-to-peer data sharing, complex scheduling, pure Python, data locality, familiar APIs, and easy setup.
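For readers unfamiliar with the `concurrent.futures` style mentioned above, here is a minimal standard-library sketch of the submit-and-collect pattern. It runs on local threads only and does not use Dask; the `square` function and the worker count are placeholders chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x):
    """Stand-in for an expensive computation."""
    return x * x


def run(values):
    """Submit one task per value and gather the results in input order."""
    with ThreadPoolExecutor(max_workers=4) as executor:
        futures = [executor.submit(square, v) for v in values]
        # result() blocks until the corresponding task has finished.
        return [f.result() for f in futures]
```

`run([1, 2, 3])` returns `[1, 4, 9]`. A distributed library that follows the same interface lets this pattern scale from one machine to a cluster with little change to the calling code.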

See More: Dask.distributed Documentation

Security

- cryptography

- pyOpenSSL

- passlib

- requests-oauthlib

- ecdsa

- pycrypto

- oauthlib

- oauth2client

- wincertstore

- rsa

So these are some of the Python libraries for data science, data analysis, machine learning, security, and distributed computing.

If you think I have missed something, let me know in the comments.

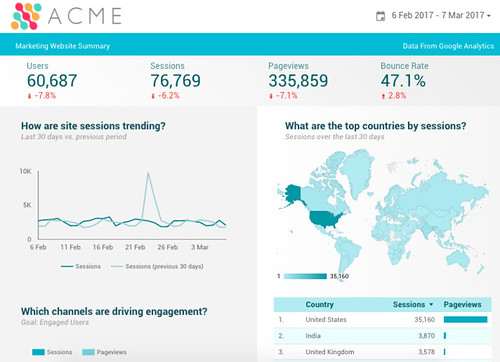

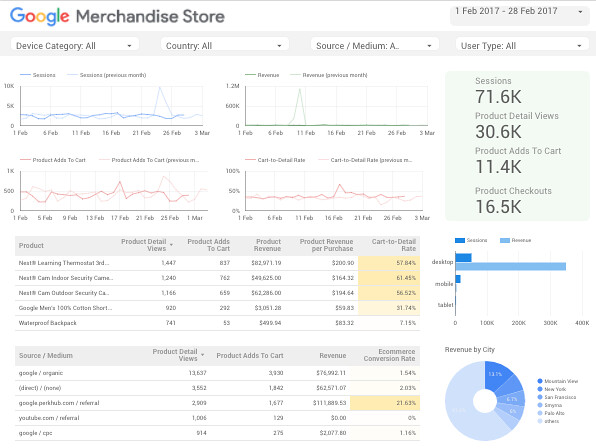

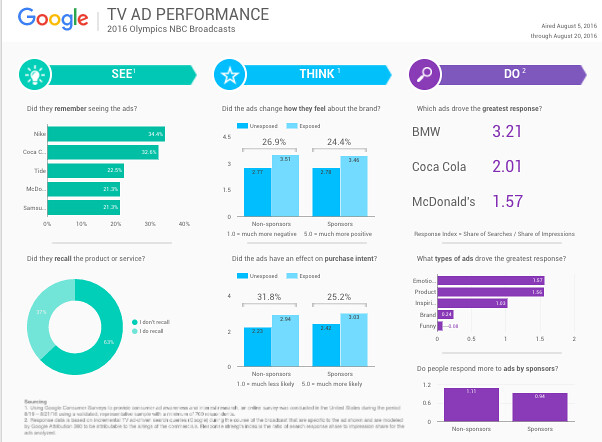

Google Data Studio dashboarding and reporting tool

Google Data Studio, the dashboarding and reporting tool launched in 2016, is now available for free to small businesses. Earlier it was limited to 5 reports; that limit has now been lifted, and the number of reports is unlimited.

Data Studio turns your data into informative dashboards and reports that are easy to read, easy to share, and fully customizable. With dashboards you can tell great data stories to support better business decisions.

“ To enable more businesses to get full value from Data Studio we are making an important change — we are removing the 5 report limit in Data Studio. You now create and share as many reports as you need — all for free. “

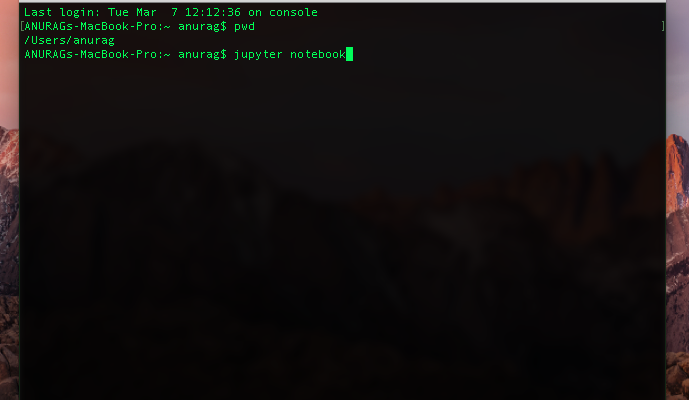

Data Processing Command Line Tools

Data processing is a series of operations on data to retrieve, transform, or classify information; in other words, the collection and manipulation of items of data to produce meaningful information. Here are some useful data processing command line tools.

An alternative to NumPy and pandas that solves real-world problems with readable code.

A Python library that wraps HTML-to-image conversion.

Converts an XML input to a JSON output.

Converts a stream of newline-separated JSON data to CSV format.

Reads data from images: an Optical Character Recognition library.
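To make one of these conversions concrete, newline-separated JSON can be turned into CSV with the standard library alone. This is a minimal sketch and not the tool referred to in the list; the function name `ndjson_to_csv` is made up, and the sketch reads all records into memory first so that it can discover every column.

```python
import csv
import io
import json


def ndjson_to_csv(lines, out):
    """Write newline-delimited JSON objects to `out` as CSV rows."""
    # Blank lines are skipped; every other line must be one JSON object.
    records = [json.loads(line) for line in lines if line.strip()]
    # Collect column names in first-seen order across all records.
    fieldnames = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)
    writer = csv.DictWriter(
        out, fieldnames=fieldnames, restval="", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)  # missing keys are filled with restval
```

For example, writing the two lines `{"a": 1, "b": "x"}` and `{"a": 2}` into an `io.StringIO()` buffer yields the header `a,b` followed by the rows `1,x` and `2,`.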