Apache Spark can easily be deployed in standalone mode; all you need to do is install Spark on your local machine. First, download a pre-built Spark package and extract it. Then open your terminal, navigate to the extracted Spark directory, and run the master start script from sbin. After that, run the worker (slave) start script, passing it the master's Spark URL, which you can find in the master's web UI at localhost:8080. You have now started a cluster manually.
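Instead of copying the master URL from the browser by hand, you can query the master's web UI from a script. This is a minimal sketch that assumes the UI also serves a JSON summary at http://localhost:8080/json/ with the master URL in a "url" field; the function names are hypothetical, and only the Python standard library is used.

```python
import json
import urllib.request

# Assumption: the standalone master's web UI (default port 8080) exposes a JSON
# summary at /json/ whose "url" field holds the spark://HOST:PORT master URL.
MASTER_UI_JSON = "http://localhost:8080/json/"


def master_url_from_json(payload: str) -> str:
    """Extract the spark:// master URL from the master UI's JSON summary."""
    data = json.loads(payload)
    url = data.get("url", "")
    if not url.startswith("spark://"):
        raise ValueError(f"unexpected master URL: {url!r}")
    return url


def fetch_master_url(ui_json: str = MASTER_UI_JSON, timeout: float = 5.0) -> str:
    """Ask a running master's web UI for its spark:// URL (hypothetical helper)."""
    with urllib.request.urlopen(ui_json, timeout=timeout) as resp:
        return master_url_from_json(resp.read().decode("utf-8"))
```

With a master running locally, calling `fetch_master_url()` should return a URL of the form spark://HOST:7077, which is what the worker start script expects.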

After that, you can start spark-shell (for Scala), PySpark (for Python), or SparkR (for R) from the bin directory.
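As a rough illustration, each language's launcher in bin can be paired with the master URL. The `LAUNCHERS` table and `shell_command` helper are hypothetical; the one Spark detail they rely on is the `--master` option, without which the shells start in local mode instead of attaching to the standalone cluster.

```python
from typing import List

# Launcher scripts in Spark's bin/ directory, keyed by language.
LAUNCHERS = {
    "scala": "bin/spark-shell",
    "python": "bin/pyspark",
    "r": "bin/sparkR",
}


def shell_command(language: str, master_url: str) -> List[str]:
    """Build the command that opens an interactive shell attached to the cluster.

    The master URL is passed explicitly with --master; otherwise the shell
    would run in local mode rather than on the standalone master.
    """
    launcher = LAUNCHERS.get(language.lower())
    if launcher is None:
        raise ValueError(f"unsupported language: {language!r}")
    return [launcher, "--master", master_url]
```

Run from the extracted Spark directory, `subprocess.run(shell_command("python", url))` would open a PySpark shell connected to the master at `url`.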

- Download a pre-built Spark package.

- Extract the downloaded Spark build (you can extract it either from the terminal or manually).

- In your terminal, navigate to the extracted folder and start the master using the script in sbin. Command: `sbin/start-master.sh`

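- Once the master is running, start a worker (slave), passing it the master's Spark URL. You can find this URL by opening localhost:8080 in your browser; by default it has the form spark://HOST:7077. Command: `sbin/start-slave.sh <URL>` (in Spark 3.1 and later the script is named `sbin/start-worker.sh`).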

- After starting the master and the worker, you have successfully started the cluster manually.

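- You can now start the interactive shells from bin: spark-shell for Scala, pyspark for Python, and sparkR for R. Pass the master URL so the shell connects to the cluster instead of running in local mode. Command: `bin/spark-shell --master <URL>`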

- Start writing your code or application.
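The start-up steps above can also be scripted. This is a minimal sketch under stated assumptions: the Spark directory (for example /opt/spark) is a placeholder, the helper names are hypothetical, and the master's host and port are pinned through the SPARK_MASTER_HOST and SPARK_MASTER_PORT environment variables so the master URL is known before the worker starts, rather than being read from localhost:8080. By default it only prints the commands (a dry run).

```python
import os
import shlex
import subprocess
from pathlib import Path
from typing import List, Tuple


def cluster_commands(spark_home: str, host: str = "localhost", port: int = 7077,
                     legacy_worker_script: bool = False) -> Tuple[str, List[List[str]]]:
    """Return the master URL and the start-up commands in order: master, then worker."""
    sbin = Path(spark_home) / "sbin"
    master_url = f"spark://{host}:{port}"
    # Spark 3.1+ ships start-worker.sh; older releases only have start-slave.sh.
    worker_script = "start-slave.sh" if legacy_worker_script else "start-worker.sh"
    commands = [
        [str(sbin / "start-master.sh")],
        [str(sbin / worker_script), master_url],
    ]
    return master_url, commands


def start_cluster(spark_home: str, host: str = "localhost", port: int = 7077,
                  legacy_worker_script: bool = False, dry_run: bool = True) -> str:
    """Print (dry_run=True) or run the start-up commands; return the master URL."""
    master_url, commands = cluster_commands(spark_home, host, port, legacy_worker_script)
    # Pin the master's bind address and port so master_url is correct up front.
    env = dict(os.environ, SPARK_MASTER_HOST=host, SPARK_MASTER_PORT=str(port))
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            # check=True stops the sequence if a script exits with an error.
            subprocess.run(cmd, env=env, check=True)
    return master_url
```

Calling `start_cluster("/opt/spark", dry_run=False)` would actually launch both daemons; set `legacy_worker_script=True` for releases that only ship start-slave.sh.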

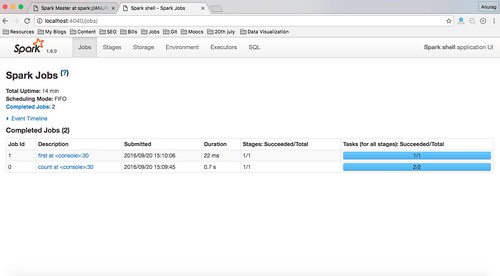

Screenshots of Standalone Mode

For a quick basic tutorial, refer to the official guide.